Join us as we uncover the latest insights into the European Union’s (the ‘EU’) ground-breaking Artificial Intelligence Act, in the wake of the leak of its most recent consolidated version on 26 January 2024 [i]. Below, we examine the core objectives, risk-based categorisation, and key provisions of this text, originally proposed by the European Commission on 21 April 2021 and now nearing adoption (the ‘AI Act’).

Starting with its primary objective, the AI Act aims to enhance the functioning of the internal market by establishing a uniform legal framework for the development, marketing, and use of artificial intelligence systems (the ‘AI systems’) within the EU. This framework is to be applied in accordance with EU values, to ensure a high level of protection of health, safety and fundamental rights enshrined in the Charter of Fundamental Rights of the EU.

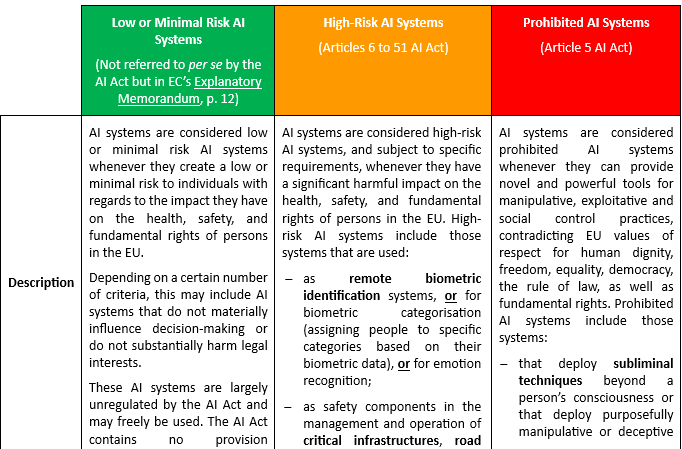

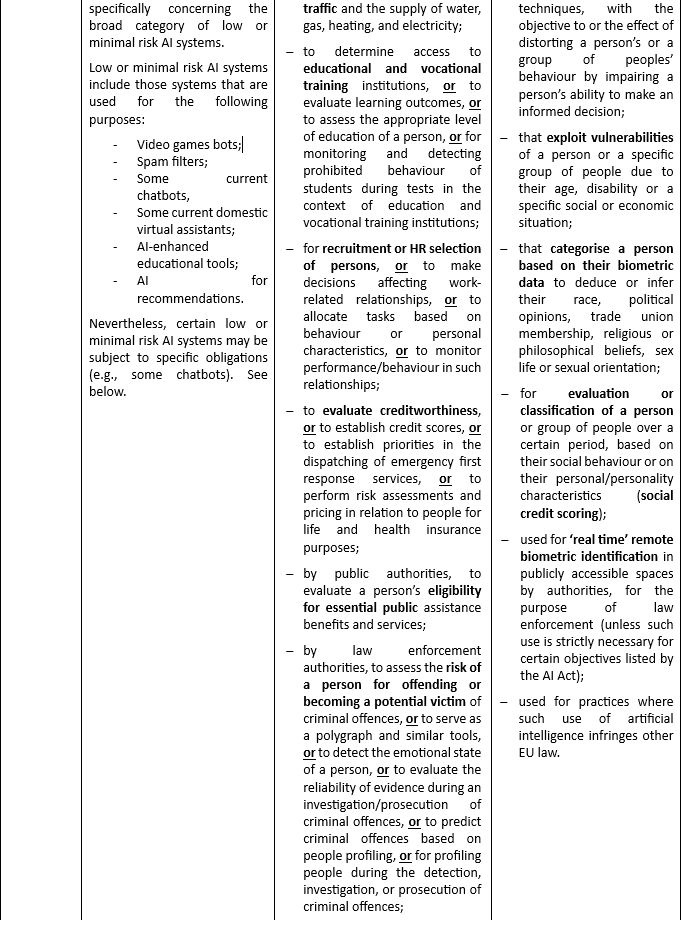

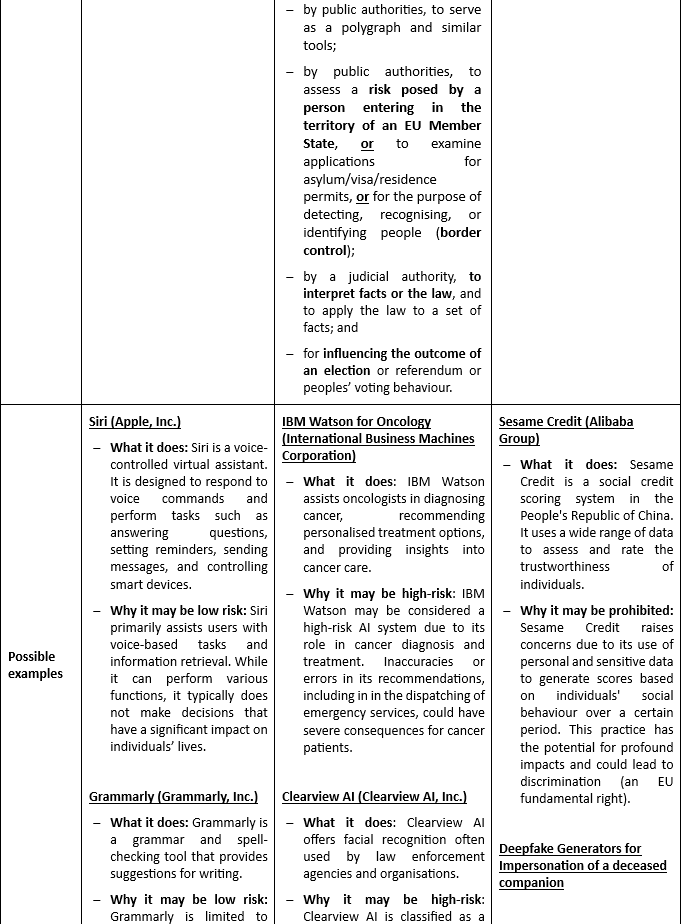

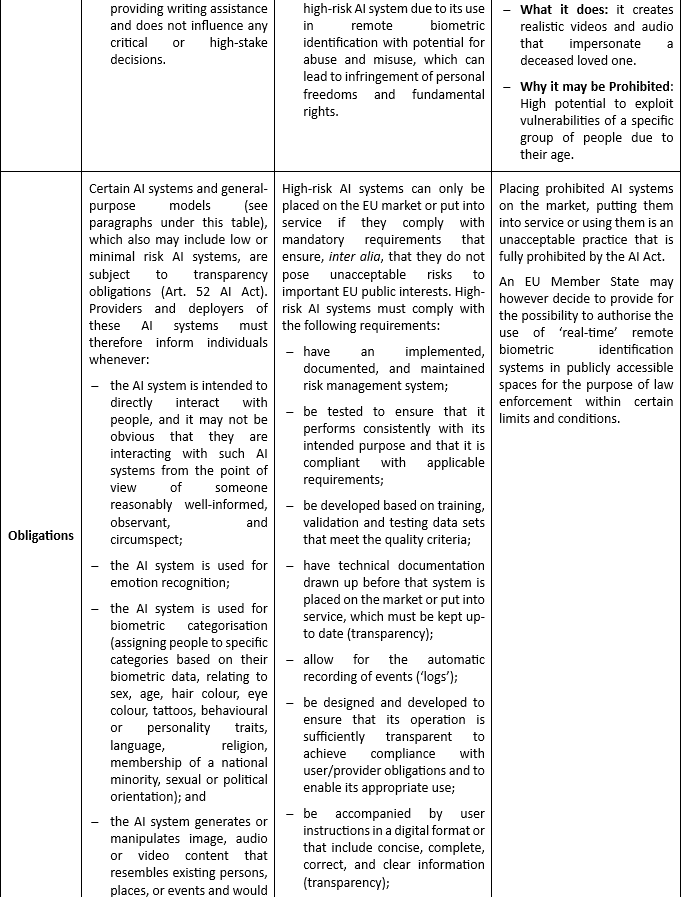

The AI Act introduces a risk-based approach for AI systems, similar to the GDPR’s data protection rules. This approach distinguishes three categories of AI systems, based on the risk posed by their use: Low or Minimal Risk AI Systems, High-Risk AI Systems, and Prohibited (or unacceptable risk) AI Systems. Some papers published online also mention a fourth level: the ‘transparency risk’ or ‘limited risk’.

This risk differentiation is apparent from Recital (14) of the AI Act, which currently states the following:

“It is therefore necessary to prohibit certain unacceptable artificial intelligence practices, to lay down requirements for high-risk AI systems and obligations for the relevant operators, and to lay down transparency obligations for certain AI systems”.

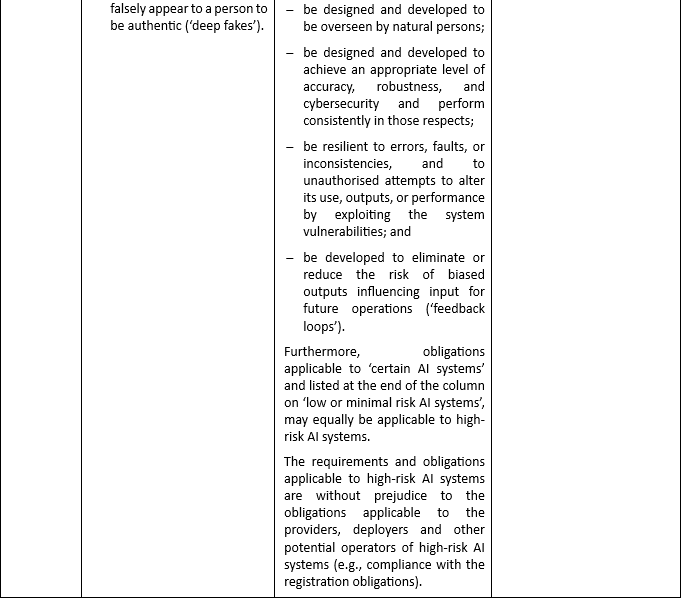

Below is a table detailing the three risk levels of AI systems, along with examples of AI systems for each risk level, and listing some requirements and obligations associated with each of those levels.

The AI Act also briefly touches upon the so-called general-purpose AI systems.

These AI systems are based on or integrate a general-purpose AI model and are capable of serving a variety of purposes (Article 3(44e) AI Act; Recital (60d) AI Act). However, the AI Act contains no specific requirement or obligation for general-purpose AI systems. Instead, it focuses on general-purpose AI models, which serve as the foundation for these general-purpose AI systems. Such models may include some of OpenAI’s ChatGPT models.

General-purpose AI models may be placed on the market in various ways, including through libraries, application programming interfaces (APIs) or as a direct download (Recital (60a) AI Act). They allow for flexible generation of content (such as text, audio, images, or video) and can readily accommodate a wide range of tasks (Recital (60c) AI Act).

General-purpose AI models are essential components of some AI systems, but they do not constitute AI systems on their own, notably because they lack the further components, such as a user interface, needed to be considered as such (Recital (60a) AI Act). Therefore, contrary to what some papers may suggest, they are not inherently AI systems, nor do they fall into one of the existing three risk categories of AI systems.

Providers of general-purpose AI models are nevertheless subject to specific requirements and obligations (Article 52c AI Act), including drawing up technical documentation, putting in place a policy to respect EU copyright law, and providing a detailed summary of the content used for training. Furthermore, since general-purpose AI models may be integrated into or form part of many different AI systems (including high-risk AI systems), they may pose a systemic risk. Where an AI model meets the criteria to be classified as having a systemic risk, its provider is subject to additional obligations (Articles 52d and 52e AI Act).

When the provider of a general-purpose AI model integrates their model into their own AI system, the obligations provided for general-purpose AI models may apply in addition to those for AI systems (Recital (60a) AI Act).

In conclusion, the EU’s AI Act represents a significant step forward in the regulation of AI systems. Its goal is to make the rules for the development, marketing, and use of these systems uniform within the EU while maintaining a high level of protection for the health, safety, and fundamental rights of EU citizens.

It also presents new opportunities and challenges, for which MOLITOR’s Media, Data, Technology, and IP team can offer its comprehensive expertise. On the one hand, we can assist clients in understanding and complying with the AI Act, particularly with regard to high-risk AI systems and transparency obligations. On the other hand, we can assist clients in either exercising their personal data rights or complying with the GDPR’s obligations relating to personal data in the AI field. This includes the drafting of terms and conditions for the deployment and usage of AI systems, as well as privacy policies and data processing agreements.

Please contact us should you require our assistance in complying with and navigating the complexities of AI regulations.

[i] Consolidated version dated 26 January 2024 of the Artificial Intelligence Act
